Researchers Enhance Self-Driving Vehicle Safety With Advanced Tracking Tools

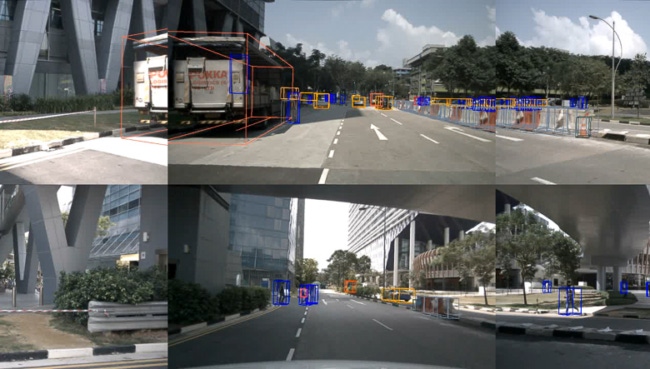

The work from the University of Toronto focuses on multi-object tracking, which helps self-driving cars navigate by tracking the position and motion of objects.

Engineering researchers at the University of Toronto Institute for Aerospace Studies (UTIAS) are developing two high-tech tools aimed at enhancing the reasoning capabilities of robotic systems, including autonomous vehicles, which could improve their safety and reliability.

In particular, the work focuses on multi-object tracking, a key function of self-driving cars that allows them to navigate densely populated areas by tracking the position and motion of objects such as vehicles, pedestrians and cyclists. The tracking information comes from computer vision sensors (2D camera images and 3D lidar scans) and is filtered 10 times per second to predict the future movement of the objects.
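As a rough illustration of what predicting future movement at 10 updates per second can look like, the sketch below uses a simple constant-velocity model. The model, the `Track` class and the `predict` function are assumptions made for illustration; the article does not describe the motion model the researchers use.

```python
from dataclasses import dataclass

FRAME_RATE_HZ = 10            # from the article: data are filtered 10 times per second
DT = 1.0 / FRAME_RATE_HZ      # seconds between consecutive frames


@dataclass
class Track:
    """Hypothetical tracked object: position in metres, velocity in metres per second."""
    x: float
    y: float
    vx: float
    vy: float


def predict(track: Track, steps: int = 1) -> Track:
    """Extrapolate a track forward by `steps` frames, assuming constant velocity."""
    elapsed = steps * DT
    return Track(
        x=track.x + track.vx * elapsed,
        y=track.y + track.vy * elapsed,
        vx=track.vx,
        vy=track.vy,
    )


# A cyclist moving at 5 m/s along x, predicted one second (10 frames) ahead.
cyclist = Track(x=0.0, y=2.0, vx=5.0, vy=0.0)
future = predict(cyclist, steps=10)
```

Real trackers typically refine such predictions with each new detection; this sketch only shows the extrapolation step.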

Sliding Window Tracker

The first tool, Sliding Window Tracker (SWTrack), allows robots to consider a broader temporal, or time, window, extending the input up to five seconds into the past. Each time the team extended the window, tracking performance improved. However, the program has its limits. Extending the window beyond five seconds produced a lag due to increased processing time.

The broader time window allows the robot to develop reasoning about its environment and plan ahead.

This helps prevent missed objects and improves tracking, especially when objects are hidden, or occluded, by other objects such as a tree or another vehicle.
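The idea of keeping a five-second history can be sketched with a simple buffer that discards frames once they fall outside the window. This is not the SWTrack algorithm itself, only a minimal illustration; the `SlidingWindow` class, its methods and the object labels are hypothetical.

```python
from collections import deque

WINDOW_SECONDS = 5.0  # from the article: the window extends up to five seconds into the past


class SlidingWindow:
    """Hypothetical buffer holding the detections seen during the last few seconds."""

    def __init__(self, window_seconds: float = WINDOW_SECONDS):
        self.window_seconds = window_seconds
        self.frames = deque()  # entries are (timestamp_in_seconds, set_of_object_ids)

    def add(self, timestamp: float, object_ids: set) -> None:
        """Store a new frame and drop any frame older than the window."""
        self.frames.append((timestamp, object_ids))
        while timestamp - self.frames[0][0] > self.window_seconds:
            self.frames.popleft()

    def last_seen(self, object_id: str):
        """Return the latest timestamp in the window at which the object was detected."""
        for timestamp, object_ids in reversed(self.frames):
            if object_id in object_ids:
                return timestamp
        return None


# A car is visible for eight seconds, then hidden behind a truck for two.
window = SlidingWindow()
for second in range(10):
    visible = {"car"} if second <= 7 else set()
    window.add(float(second), visible)
```

A tracker that only compares the current frame with the previous one would have lost the car as soon as it was occluded, while `window.last_seen("car")` still recovers where it was last observed. The cost is that a longer window means more frames to process, which matches the lag the team saw beyond five seconds.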

The research team behind SWTrack (UTIAS Professor Steven Waslander, PhD student Sandro Papias and third-year engineering student Robert Ren) trained, tested and validated their algorithm using nuScenes, a public, large-scale dataset for autonomous driving. They presented their results in a paper at the 2024 International Conference on Robotics and Automation (ICRA) in Yokohama, Japan.

“SWTrack widens how far into the past a robot considers when planning,” Papias told UT Engineering News. “So instead of being limited by what it just saw one frame ago and what is happening now, it can look over the past five seconds and then try to reason through all the different things it has seen.”

UncertaintyTrack

SWTrack’s capabilities dovetail with another UTIAS research project presented at ICRA, UncertaintyTrack, which helps quantify errors in multi-object tracking. Specifically, the program is a collection of extensions for the 2D tracking-by-detection paradigm, which is used in the majority of multi-object tracking methods.

Most of these methods “blindly trust the incoming detections with no sense of their associated localization uncertainty,” the team, composed of Waslander and master’s student Chang Won Lee, wrote in their paper.

UncertaintyTrack uses probabilistic object detection to estimate how uncertain each detection is, which can prevent errors in multi-object tracking, particularly in adverse conditions such as low light or heavily occluded views. Using the Berkeley Deep Drive multiple-object-tracking dataset, UncertaintyTrack helped reduce the number of false identifications in multi-object tracking by approximately 19%, according to the paper.
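One common way a tracker can use localization uncertainty is to scale the distance between a predicted position and a detection by the detection's variance before deciding whether the two can match. The sketch below shows that general idea with a Mahalanobis-style gate. It is an assumption made for illustration and not the method from the UncertaintyTrack paper; the function names and the diagonal-variance simplification are hypothetical.

```python
# 95% chi-square threshold for 2 degrees of freedom, a standard gating value.
CHI2_GATE_2DOF_95 = 5.991


def mahalanobis_sq(predicted, detected, detection_var, prediction_var=(0.0, 0.0)):
    """Squared distance between two 2D points, scaled by their combined per-axis variance.

    Assumes independent x and y errors (a diagonal covariance) to keep the sketch short.
    """
    dx = detected[0] - predicted[0]
    dy = detected[1] - predicted[1]
    return (dx * dx / (detection_var[0] + prediction_var[0])
            + dy * dy / (detection_var[1] + prediction_var[1]))


def is_plausible_match(predicted, detected, detection_var, gate=CHI2_GATE_2DOF_95):
    """Accept the pairing only if the scaled distance falls inside the gate."""
    return mahalanobis_sq(predicted, detected, detection_var) <= gate


predicted_position = (0.0, 0.0)
detection = (3.0, 0.0)  # three units away from where the track was expected

# A confident detection (low variance) this far away is rejected as a match.
confident = is_plausible_match(predicted_position, detection, detection_var=(1.0, 1.0))

# The same offset from an uncertain detection (high variance) remains plausible.
uncertain = is_plausible_match(predicted_position, detection, detection_var=(4.0, 4.0))
```

A tracker that ignores uncertainty would treat both detections identically. Here the same three-unit offset is rejected when the detector is confident and accepted when it is in doubt, for example in low light.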

“The key thing here is that for safety-critical tasks, you want to be able to know when the predicted detections are likely to cause errors in downstream tasks such as multi-object tracking,” Lee told Engineering News. “Uncertainty estimates give us an idea of when the model is in doubt, that is, when it is highly likely to give errors in predictions.”
