Generative AI Video for Self-Driving Development Upgraded

Updated VidGen-2 offers double the resolution of its predecessor, with improved realism, multi-camera support

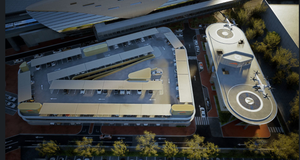

California-based start-up Helm.ai has released an updated version of its VidGen generative AI model for autonomous driving.

VidGen-2 follows the launch of VidGen-1 earlier this year and, as with the original, produces highly realistic driving video sequences.

The updated VidGen-2 offers double the resolution (696 x 696) of its predecessor, with improved realism at up to 30 frames a second, and multi-camera support.

Videos can be generated without an input prompt or from a single image or input video, and the step up in quality delivers smoother, more detailed simulations.

The result, said Helm.ai, is a cost-effective and scalable package for automakers developing and validating autonomous vehicles.

VidGen-2 was trained on thousands of hours of diverse driving footage using Nvidia H100 Tensor Core graphics processing units.

It leverages Helm.ai’s deep neural network architectures and the company’s own ‘deep teaching’ technique, a large-scale unsupervised learning method that it has been developing since 2016, to produce videos that cover multiple geographies, camera types and vehicle perspectives.

The videos are said to offer highly realistic appearances, time-consistent motion and accurate human behaviors. They are also said to cover a wide range of scenarios, including highway and urban driving, multiple vehicle types, interactions with pedestrians and cyclists, intersections, turns, changing weather conditions and lighting variations.

In multi-camera mode, the scenes are generated consistently across all perspectives.

According to the company, arguably the greatest advantage over conventional non-AI simulators is the speed at which videos can be generated. That reduces development time and cost, always a major concern for automakers working on autonomous vehicles.

“The latest enhancements in VidGen-2 are designed to meet the complex needs of automakers,” said Vladislav Voroninski, Helm.ai CEO and co-founder. “These advancements enable us to generate highly realistic driving scenarios while ensuring compatibility with a wide variety of automotive sensor stacks.

“The improvements made in VidGen-2 will also support advancements in our other foundation models, accelerating future developments across autonomous driving and robotics automation.”

Helm.ai is one of several AI-focused companies that have risen to prominence in automated driving over the last 18 months. The company raised $55 million in series C funding last year, with investors including Honda and Goodyear Ventures.
