Why AI for Cybersecurity Has a Spinal Tap Problem

Some of the hype surrounding AI for cybersecurity may be justified, but some vendors are still marketing “AI” as a sort of magical elixir.

March 28, 2019

There’s a famed scene in the mockumentary “This Is Spinal Tap” that mirrors the abandon with which the term “artificial intelligence” is applied in modern tech marketing. In the film, guitarist Nigel Tufnel (Christopher Guest) shows off custom Marshall amplifier heads whose knobs top out at 11 rather than the customary 10. “Why don’t you just make 10 louder?” asks the film’s documentarian, Marty DiBergi, played by Rob Reiner. Apparently dumbfounded, Tufnel responds: “These go to 11.”

In a similar manner, numerous tech firms are slapping the AI label on a host of undeserving products. Last year, The Guardian used the term “pseudo-AI” to describe companies that publicly prototype AI services while relying on undisclosed human helpers. For instance, a company might claim it uses AI to automate routine jobs such as analyzing objects in video feeds or reading text on receipts, but enlist humans to perform those tasks in the background.

Or perhaps a company will simply believe its use of AI is unique when it isn’t. “I always laugh when a new [cybersecurity] startup comes up and says: ‘We are novel by doing AI,’” said Zulfikar Ramzan, chief technology officer of RSA. “When I hear that, I think: ‘We’ve been using AI before your founder was born, practically, or at least since your founder was in elementary school,’” Ramzan joked.

In any case, many cybersecurity firms get swept up in using the term “AI” in their marketing without being clear about what they mean. “Oftentimes, they don’t understand the nuance and the context that will make it work and how to apply it,” Ramzan said. “You can take a powerful tool and completely misuse it. I’ve seen it happen over and over again with machine learning and AI tools where people think they know how to apply it, and they just do a horrific job in practice because they don’t consider all the practical implications.”


Ramzan is worried by the tendency of the cybersecurity industry to chase after trendy new technologies without careful deliberation. “I am seeing this left, right and center where a new buzzword will pop up and many people will think: ‘That’s what I need to go jump toward without really understanding what’s involved,’” he said.

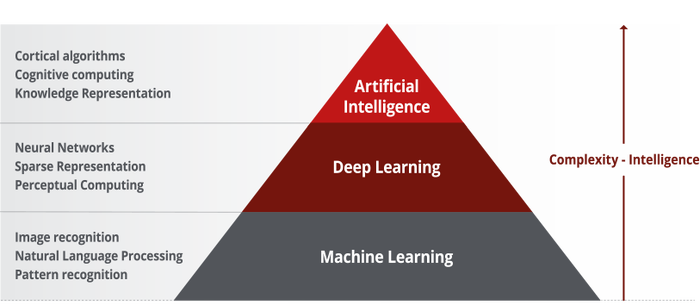

At the other end of the spectrum, some vendors brand relatively unsophisticated technologies, such as if-then-else statements or rudimentary machine learning, as artificial intelligence. Grant Bourzikas, McAfee’s chief information security officer and vice president of data science applied research, recently met with a cybersecurity vendor that name-dropped “AI.” Bourzikas recalled asking the company: “What does AI mean? What are you running?” The vendor’s spokesperson replied: “It is random forest,” referring to the machine learning algorithm. When comparing cybersecurity AI offerings, a granular understanding of how the products differ, along with a model for putting them in perspective, is indispensable. In McAfee’s view, machine learning and deep learning sit at the base of a pyramid, with AI at the pinnacle.
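For readers unfamiliar with the term, a random forest trains many decision trees on random resamples of the training data and lets them vote. The Python sketch below is a deliberately tiny, hypothetical version: each “tree” is a one-split decision stump, and the two features (failed logins and megabytes uploaded) and the eight training examples are invented. Production implementations grow much deeper trees and sample a subset of features at every split.

```python
import random
from collections import Counter

def fit_stump(data, feature):
    """Pick the threshold on one feature that makes the fewest training errors.

    The stump predicts 1 ("suspicious") when x[feature] >= threshold.
    """
    best_threshold, best_errors = None, None
    for threshold in sorted({x[feature] for x, _ in data}):
        errors = sum((x[feature] >= threshold) != bool(y) for x, y in data)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return feature, best_threshold

def fit_forest(data, n_trees=15, seed=0):
    """Train n_trees stumps, each on a bootstrap sample and one random feature."""
    rng = random.Random(seed)
    n_features = len(data[0][0])
    forest = []
    for _ in range(n_trees):
        boot = [rng.choice(data) for _ in data]   # sample rows with replacement
        feature = rng.randrange(n_features)       # random feature for this stump
        forest.append(fit_stump(boot, feature))
    return forest

def predict(forest, x):
    """Majority vote across all stumps (an odd n_trees avoids two-way ties)."""
    votes = Counter(int(x[f] >= t) for f, t in forest)
    return votes.most_common(1)[0][0]

# Hypothetical training data: ([failed_logins, mb_uploaded], label), 1 = suspicious.
DATA = [
    ([0, 1.0], 0), ([1, 2.0], 0), ([0, 0.5], 0), ([2, 1.5], 0),
    ([8, 40.0], 1), ([10, 55.0], 1), ([7, 30.0], 1), ([9, 60.0], 1),
]

forest = fit_forest(DATA)
print(predict(forest, [12, 80.0]))  # → 1
print(predict(forest, [0, 0.2]))
```

Useful as the technique is, nothing here goes beyond counting errors and taking a vote, which is why Bourzikas pressed the vendor on what its “AI” actually was.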

McAfee’s model with AI at the pinnacle of the pyramid.

To be fair, you could argue that machine learning is a branch of the larger discipline of artificial intelligence. Gartner, for instance, takes a wide view of AI as the application of “advanced analysis and logic-based techniques, including machine learning, to interpret events, support and automate decisions, and take actions.” But Bourzikas and others who favor a narrower definition reserve the term for techniques that go beyond lower-order analytics and statistics, as well as beyond machine learning and deep learning. Rather than using AI as a catch-all term, Bourzikas advocates using narrower terms like machine learning and deep learning when appropriate, and reserving AI for more sophisticated applications. “I think that as an industry we’ve used the terms machine learning and artificial intelligence and deep learning very interchangeably, but there are differences within them,” he said. Machine learning is “very transparent in terms of the weighting and the feature selection,” Bourzikas explained. Deep learning is “where you start to get into neural networks.”
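To make Bourzikas’s point about transparency concrete, the sketch below trains a logistic regression, a classic machine learning model, with plain batch gradient descent and then prints one weight per feature. The phishing-email features and the eight labeled examples are hypothetical, chosen only for illustration.

```python
import math

# Hypothetical features for a toy phishing-email classifier (1 = present, 0 = absent).
FEATURES = ["has_link", "mentions_password", "sender_is_internal"]

def predict_proba(w, b, x):
    """Sigmoid of the weighted sum: the model's estimated probability of phishing."""
    z = b + sum(wi * xi for wi, xi in zip(w, x))
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(rows, labels, lr=0.5, epochs=2000):
    """Batch gradient descent on the average log-loss; returns (weights, bias)."""
    w, b, n = [0.0] * len(rows[0]), 0.0, len(rows)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, y in zip(rows, labels):
            err = predict_proba(w, b, x) - y      # derivative of log-loss w.r.t. z
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

# Invented labeled examples: 1 = phishing, 0 = legitimate.
ROWS = [[1, 1, 0], [1, 0, 0], [1, 1, 0], [0, 0, 1],
        [0, 1, 1], [1, 0, 1], [0, 0, 0], [0, 1, 0]]
LABELS = [1, 1, 1, 0, 0, 0, 0, 0]

weights, bias = train_logistic(ROWS, LABELS)
for name, wi in zip(FEATURES, weights):
    print(f"{name:>20}: {wi:+.2f}")   # one inspectable number per feature
print(f"{'bias':>20}: {bias:+.2f}")
```

A positive weight pushes an email toward the phishing label and a negative one pushes it away, so an analyst can read the model’s reasoning directly; a deep neural network typically offers no equally direct readout.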

In deep learning, an input layer on one side accommodates data. On the other side is an output layer, which presents a final prediction or decision, as the Industrial Internet Consortium’s Analytics Framework explains. Between those layers sit one or more hidden layers. “By using multiple hidden layers, deep learning algorithms learn the features that need to be extracted from the input data without the need to input the features to the learning algorithm explicitly as in the case of machine-learning algorithms,” explains the IIC document.
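The layered structure the IIC describes can be sketched in a few lines of Python. The weights below are hypothetical, hand-picked numbers that keep the arithmetic easy to follow; in actual deep learning they would be learned from data, typically via backpropagation, which is what lets the hidden layers discover useful features on their own. The ReLU and sigmoid activations are common choices, not ones the IIC document prescribes.

```python
import math

def dense(inputs, weights, biases):
    """Fully connected layer: each output is a weighted sum of every input plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    return [max(0.0, v) for v in values]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, layers):
    """Input layer -> hidden layers (ReLU) -> output layer (sigmoid)."""
    h = x
    for weights, biases in layers[:-1]:          # hidden layers
        h = relu(dense(h, weights, biases))
    out_weights, out_biases = layers[-1]         # output layer
    return [sigmoid(z) for z in dense(h, out_weights, out_biases)]

# Hypothetical parameters: 3 inputs -> 2 hidden -> 2 hidden -> 1 output.
LAYERS = [
    ([[1.0, 0.0, 1.0], [0.0, 1.0, -1.0]], [0.0, 0.0]),   # hidden layer 1
    ([[1.0, 1.0], [-1.0, 0.0]], [0.0, 1.0]),             # hidden layer 2
    ([[1.0, 0.0]], [-3.0]),                              # output layer
]

print(forward([1.0, 0.0, 2.0], LAYERS))  # → [0.5]
```

Even in this toy, the intermediate values carry no labels of their own, which hints at why neural networks are harder to interpret than the weighted features of classic machine learning.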

In machine learning, as well as AI, the data output is everything, according to Peter Tran, a cyber defense expert who has seen this firsthand in the financial technology industry. “When you have trillions of dollars moving across the internet, the slightest anomaly matters. It can be an indicator of a cyberattack, financial fraud or other malicious activity,” Tran said. The global financial industry has relied on techniques such as deep learning “for some time now,” Tran said, whereas the same technology is at an earlier stage of adoption in the cybersecurity industry.
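Anomaly detection of the kind Tran describes can be illustrated with a simple statistical baseline. The sketch below, far simpler than the deep learning techniques he mentions, flags any new transaction amount more than three standard deviations from a historical mean; the amounts and the threshold of 3.0 are invented for illustration.

```python
import statistics

def flag_anomalies(history, new_values, threshold=3.0):
    """Return new values whose z-score against the history exceeds the threshold."""
    mean = statistics.mean(history)
    # Sample standard deviation; assumes at least two, not all identical, values.
    stdev = statistics.stdev(history)
    return [v for v in new_values if abs(v - mean) / stdev > threshold]

# Invented baseline of recent transfer amounts for one account.
HISTORY = [120.0, 95.5, 101.2, 130.0, 110.8, 99.9, 105.3, 118.7]

print(flag_anomalies(HISTORY, [112.0, 9800.0, 87.0]))  # → [9800.0]
```

A fixed z-score rule misses subtler patterns, such as many small transfers that each look normal, which is one reason financial firms have turned to richer models.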

AI for cybersecurity, viewed broadly or narrowly, has the potential to help the industry deal with thorny problems such as the shortage of qualified cybersecurity workers and the growing complexity posed by the proliferation of IoT and other networked devices. In many cases, though, organizations would be better served by first examining their attack surface and fortifying it. “AI is very buzzwordy right now [in cybersecurity]. It’s still something that’s neat from a vendor perspective, but nine times out of 10, your adversaries are going after low-hanging fruit,” said Adam Mashinchi, vice president of product management at Scythe. That low-hanging fruit could be a Windows XP machine in an industrial facility, a connected video camera with a default username and password, or an employee clicking on a phishing link.
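Checking for this kind of low-hanging fruit lends itself to simple, explainable rules. The hypothetical audit below walks a device inventory and flags unsupported operating systems such as Windows XP and factory-default credentials. The device records, the default-credential list and the field names are all assumptions made for illustration; a real audit would draw on vendor documentation for the specific models deployed.

```python
from dataclasses import dataclass

# Hypothetical examples of factory-default logins; a real list would come from
# vendor documentation for the device models actually deployed.
DEFAULT_CREDENTIALS = {("admin", "admin"), ("admin", "password"), ("root", "12345")}
UNSUPPORTED_OS = {"Windows XP"}

@dataclass
class Device:
    name: str
    os: str
    username: str   # login configured on the device, as recorded by the audit
    password: str

def audit(devices):
    """Return (device name, reason) pairs for easily exploited weaknesses."""
    findings = []
    for d in devices:
        if d.os in UNSUPPORTED_OS:
            findings.append((d.name, f"unsupported OS: {d.os}"))
        if (d.username, d.password) in DEFAULT_CREDENTIALS:
            findings.append((d.name, "factory-default credentials"))
    return findings

inventory = [
    Device("plant-hmi-01", "Windows XP", "operator", "S3cure!pass"),
    Device("lobby-camera", "embedded Linux", "admin", "admin"),
    Device("analyst-laptop", "Windows 10", "jdoe", "x9!Lk2#q"),
]
for name, reason in audit(inventory):
    print(f"{name}: {reason}")
```

Each finding names a device and a concrete reason, so every fix can be traced to a specific reduction in exposure.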

Compared with deploying AI to enhance an organization’s cyberdefenses, addressing such threats is straightforward. And once a cybersecurity team has addressed them, it can clearly explain how it reduced the organization’s attack surface.

That’s not necessarily the case for companies deploying AI, machine learning or deep learning for cybersecurity. All of these techniques have significant potential, but they can also leave their users in the position of Nigel Tufnel, who can’t explain why his amplifier’s 11 is any louder than 10. If a neural network concludes that an individual is guilty of a crime, but the data scientists deploying it can’t explain why, that conclusion won’t hold up in a court of law. “Even today, if we think something is malware or not malware, we need to be able to explain what the decision-making process is on how we knew that,” Bourzikas said. Or perhaps an adversary poisoned the machine learning model by tampering with the data it was trained on. “Really being able to articulate the message” is vital, he added. Explainable AI is “going to be something that this industry is going to have to pay attention to.”
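For a simple linear model, explaining a verdict is mechanical: each feature’s contribution to the score is its weight times its value. The sketch below applies that idea to a hypothetical malware score; the feature names, weights and bias are invented. Deep networks don’t decompose this neatly, which is why explainable-AI methods such as SHAP or integrated gradients approximate the same kind of per-feature attribution.

```python
# Hypothetical weights for a linear malware score; names and values are invented.
WEIGHTS = {
    "packed_binary": 2.0,
    "writes_to_startup": 1.5,
    "signed_by_known_vendor": -3.0,
    "network_beacons": 2.5,
}
BIAS = -2.0

def explain(features):
    """Score a sample and rank each feature's contribution (weight * value)."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked

sample = {"packed_binary": 1, "writes_to_startup": 1,
          "signed_by_known_vendor": 0, "network_beacons": 1}
score, ranked = explain(sample)
print("verdict:", "malware" if score > 0 else "benign", f"(score {score:+.1f})")
for name, contribution in ranked:
    if contribution:   # skip features that did not contribute to this verdict
        print(f"  {name}: {contribution:+.1f}")
```

The ranked list gives an analyst a ready answer to “how did we know that,” and an unexpected entry near the top can be an early sign that the model has been tampered with.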
