MIT Researchers Develop Robotic Hand That Identifies Objects by Touch

Using a built-in camera and an array of sensors, the robotic hand can understand the objects it holds

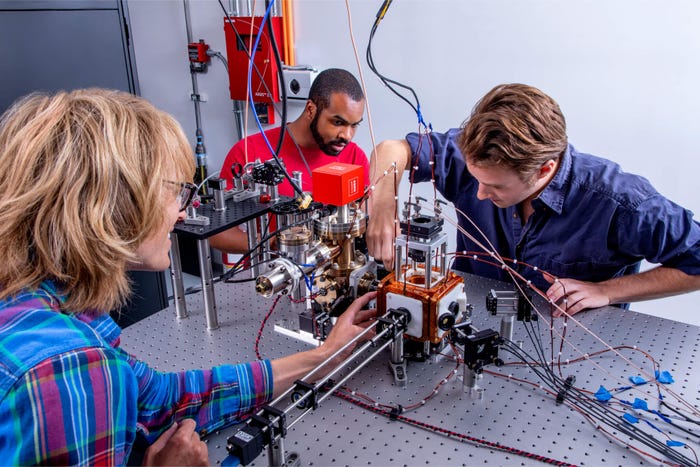

MIT researchers have created a robotic hand that can identify an object by grasping it just once.

Inspired by the human hand, the robotic hand features an array of high-resolution touch sensors that let the robot feel and understand the object it is holding.

The sensors are embedded in the hand’s soft outer layer, its silicone “skin,” and use a camera and LEDs to gather visual information about an object's shape. Because neighboring sensors slightly overlap, they provide continuous sensing along the entire length of each finger.

The inner layer of the design is a rigid skeleton, which strengthens the hand and allows it to carry items.

“Having both soft and rigid elements is very important in any hand, but so is being able to perform great sensing over a really large area, especially if we want to consider doing very complicated manipulation tasks like what our own hands can do,” said study co-author Sandra Liu. “Our goal with this work was to combine all the things that make our human hands so good into a robotic finger that can do tasks other robotic fingers can't currently do.”

Using this design, the team built a three-fingered robotic hand that, in trials, identified objects after a single grasp with 85% accuracy.

During these trials, images captured by the hand’s camera were fed to a machine-learning algorithm trained to identify objects directly from raw camera image data.

The team said the design could be useful in assisted care, where a robotic hand could lift and move heavy items and help elderly people complete daily tasks. In the future, the researchers also hope to improve the design’s durability and capabilities.

"Although we have a lot of sensing in the fingers, maybe adding a palm with sensing would help it make tactile distinctions even better," said Liu.

This work was supported by the Toyota Research Institute and the Office of Naval Research.
