Quantum Aims to Interpret Large Language Models

Researchers integrate quantum computing with AI for interpretable text-based tasks such as answering questions

New research has demonstrated that quantum artificial intelligence can show how the large language models that power chatbots like ChatGPT come up with their answers.

Interpretability is one of the cornerstones of responsible AI, but many systems are essentially “black boxes,” meaning users cannot understand how the AI produces wrong answers or how to fix them.

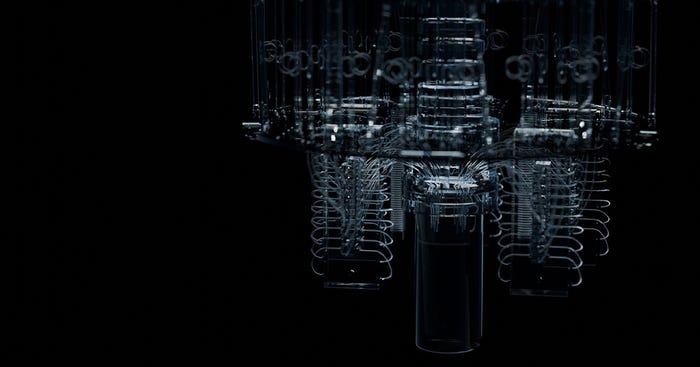

Quantinuum researchers have published a paper describing how they combined quantum computing with AI in text-level quantum natural language processing (QNLP) for the first time to make AI interpretable.

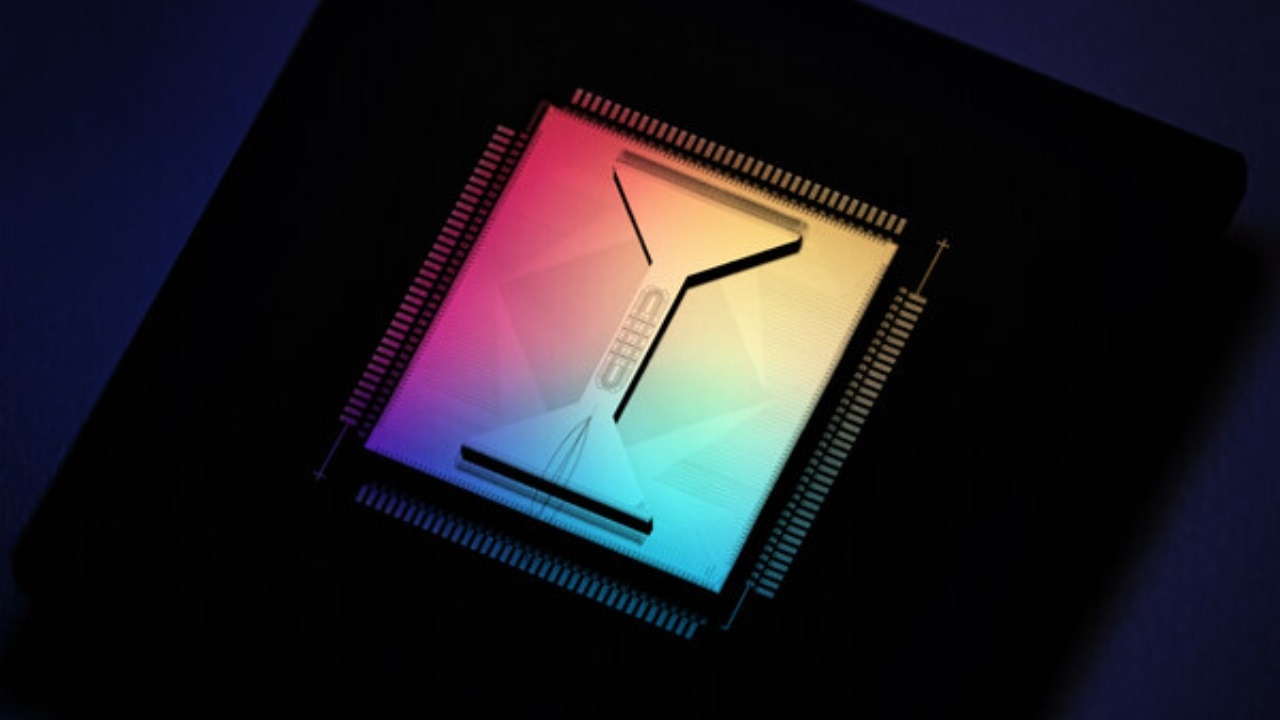

They developed a new QNLP model, QDisCoCirc, which they used to carry out experiments that proved it is possible to train a model for a quantum computer in an interpretable and scalable manner for text-based tasks.

The team targeted “compositional interpretability,” meaning that human-friendly meaning can be assigned to the components of a model to understand how they fit together.

This makes it transparent how AI models generate their answers, which is essential for applications in areas such as health care, finance, pharma and cybersecurity. AI is increasingly under legislative and governmental scrutiny to ensure it behaves ethically and its responses can be explained.

Quantinuum made the approach scalable using “compositional generalization”: training the model on small examples on classical computers and then testing it on quantum computers with much larger examples that classical computers cannot simulate.

“The compositional structure allows us to inspect and interpret the word embeddings the model learns for each word, as well as the way in which they interact,” the researchers said in the paper. “This improves our understanding of how it tackles the question-answering task.”

This compositional approach also avoids the trainability challenges posed by the “barren plateau” problem in conventional quantum machine learning (QML). A barren plateau occurs when the gradient of the loss function, which measures how well a model’s predictions match the actual data, becomes almost completely flat as the system gets larger. This makes it difficult to find the right direction in which to improve the model.
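To give a feel for the barren plateau effect, here is a minimal toy sketch in Python. It is not the QDisCoCirc model or anything from the researchers' paper. It assumes a textbook-style setup in which each of n qubits is rotated by a random angle and the cost is one minus the probability of measuring all zeros, C = 1 - prod_i cos^2(theta_i / 2). The function names are hypothetical and chosen only for illustration.

```python
import math
import random


def gradient_sample(n_qubits, rng):
    """Draw random angles and return dC/d(theta_1) for the toy cost
    C = 1 - prod_i cos^2(theta_i / 2)  (an illustrative assumption)."""
    thetas = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_qubits)]
    # Contribution of every qubit except the first one.
    rest = math.prod(math.cos(t / 2.0) ** 2 for t in thetas[1:])
    # d/d(theta_1) of -cos^2(theta_1 / 2) is 0.5 * sin(theta_1).
    return 0.5 * math.sin(thetas[0]) * rest


def gradient_variance(n_qubits, n_samples=20_000, seed=0):
    """Monte Carlo estimate of the gradient variance over random angles."""
    rng = random.Random(seed)
    grads = [gradient_sample(n_qubits, rng) for _ in range(n_samples)]
    mean = sum(grads) / n_samples
    return sum((g - mean) ** 2 for g in grads) / n_samples


def analytic_variance(n_qubits):
    """Closed form for this toy cost: (1/8) * (3/8)^(n - 1)."""
    return 0.125 * 0.375 ** (n_qubits - 1)


# The variance shrinks exponentially as qubits are added, so a typical
# gradient carries less and less information about which way to move.
for n in (2, 6, 10):
    print(n, gradient_variance(n), analytic_variance(n))
```

In this toy setting the typical gradient shrinks by a constant factor with every added qubit, which is the flattening the article describes. Real QML models are more complicated, and how the compositional approach sidesteps the problem is detailed in the researchers' paper rather than in this sketch.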

The new method makes large-scale quantum models more efficient and scalable for complex tasks.

The researchers demonstrated this using Quantinuum's H1-1 trapped-ion quantum processor, which they said constitutes the first proof-of-concept implementation of scalable compositional QNLP.

“Earlier this summer we published a comprehensive technical paper that outlined our approach to responsible and safe AI—systems that are genuinely, unapologetically and systemically transparent and safe,” said Quantinuum founder and chief product officer Ilyas Khan.

“In that work, we foreshadowed experimental work that would exhibit and explain how this could work at scale on quantum computers. I am pleased and excited to share this next step with extensive published details that are now also in the public domain. Natural language processing remains at the heart of LLMs and our approach continues to add substance to our ambitious approach and further expands the waterfront in terms of our work on a full stack extending from the quantum processor to the applications from chemistry to cybersecurity and AI.”
