Quantum Computing to Offer Real Commercial Benefits ‘by 2024’

Six takeaways from IBM’s quantum computing update

.jpg?width=1280&auto=webp&quality=95&format=jpg&disable=upscale)

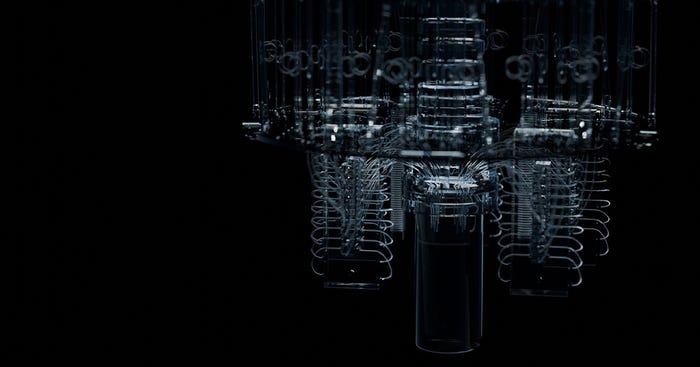

IBM held a quantum summit earlier this month, at which the company shared details of its newly announced 433-qubit Osprey processor and fleshed out how it will achieve the milestones set out in its quantum roadmap.

At an event in London this week, IBM distinguished engineer and quantum ambassador Richard Hopkins explained what IBM’s quantum innovations mean for companies looking to adopt the technology in the near term.

Here are some key takeaways from the event in Hopkins’ own words.

Useful Quantum Computing on the Horizon

Quantum is being seen as an industry enabler on a global scale. The key message that came out of the summit is that our mission of bringing useful quantum computation to the world has moved forward. We're now in a position where we’re improving the error rate in our chips by an order of magnitude. This means that we're getting very close to the point where quantum can be used for commercial use cases.

Osprey Processor Launched

The main thing we announced was the Osprey chip. We've gone from 127 qubits on the Eagle quantum processing unit (QPU) to 433 qubits on a single chip.

That chip is already working at the same level of accuracy as our previous generation. The new one is the size of an A5 piece of paper, and the 433 qubits mean a three-fold increase within a year at the same level of accuracy.

It's running at about 10 times the speed. The way we measure that is circuit-layer operations per second (CLOPS). Within the year we've gone from 1,400 CLOPS to about 15,000 CLOPS, so it's a huge change. To do that, we've had to start changing the design of the control hardware to one based on ribbon cables.

Quantum has a different power footprint from normal computing. Each additional qubit adds a fixed, linear amount of power to the machine but potentially doubles the machine's processing capability, as everything's entangled.
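To make that scaling claim concrete, here is a minimal Python sketch. It assumes an idealized register in which n qubits span 2**n basis states, and it uses a hypothetical unit of power per qubit (`POWER_PER_QUBIT` is a placeholder, not an IBM figure). It illustrates only the shape of the two curves, not the performance of any real machine.

```python
# Illustrative only: linear power growth versus exponential state-space growth.
# POWER_PER_QUBIT is a hypothetical unit of power, not an IBM figure.
POWER_PER_QUBIT = 1.0


def relative_power(n_qubits: int) -> float:
    """Power grows linearly: each extra qubit adds one fixed unit."""
    return n_qubits * POWER_PER_QUBIT


def basis_states(n_qubits: int) -> int:
    """An idealized n-qubit register spans 2**n basis states."""
    return 2 ** n_qubits


for n in (1, 2, 3, 10, 127, 433):
    digits = len(str(basis_states(n)))
    print(f"{n:>3} qubits: {relative_power(n):>5.0f} power units, "
          f"2**{n} basis states ({digits} decimal digits)")
```

Adding one qubit raises the power figure by a single unit while doubling the number of basis states, which is the contrast described above.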

By 2025, we want to have 4,000 qubits in a single machine, so we'll have to go to the next level of control hardware. That hardware is going to get moved inside the cryogenic chamber, which means it can run at about 10 milliwatts for every four qubits. The idea is to turn this into a hugely efficient, quantum-centric supercomputer.
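As a rough back-of-the-envelope check, the quoted figure of about 10 milliwatts for every four qubits can be applied to the 4,000-qubit target. The sketch below assumes the figure scales strictly linearly and covers only the control hardware inside the cryogenic chamber; both are assumptions made here, not statements from IBM.

```python
# Figures quoted in the talk: about 10 milliwatts for every four qubits.
MILLIWATTS_PER_GROUP = 10
QUBITS_PER_GROUP = 4


def control_power_watts(n_qubits: int) -> float:
    """Estimated in-chamber control power, ASSUMING strictly linear scaling."""
    groups = n_qubits / QUBITS_PER_GROUP
    return groups * MILLIWATTS_PER_GROUP / 1000.0


print(control_power_watts(4000))  # 10.0 (watts)
```

Under those assumptions, the control hardware for 4,000 qubits would draw on the order of 10 watts.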

Introducing Modularity and System Two

We've announced that we're going to move away from a single QPU chip to using multiple chips together to enable us to tackle bigger and harder problems. We've got four different mechanisms by which we're going to link quantum computers together, which is key to getting the scale that's required to tackle real-world problems.

To begin with, we're simply going to connect the machines using conventional classical hardware so that the control systems can be coordinated: one QPU can be working on a problem for a client, and another QPU can take the next step in that job. The information that's needed flows between those chips over conventional hardware.

Later on, we're going to be linking the chips directly, in much the same way that we do with normal chips today. Quantum computers use gates with different numbers of qubits on them. We'll have two-qubit gates that span the chips so you can link chips together in an efficient way.

Then an even greater level of scalability is achieved using micro signals that will flow between the chips inside the machine itself, so you can start stacking the chips into 3D arrangements.

The final link is to connect quantum computers using fiber optics, which enables you to connect machines through an entangled link. And those will enable us to link multiples of our next-generation Quantum System Two machines. The idea is that you start putting more and more QPUs using one wrap so you get to the 4,000 qubits and then you can connect at least another two machines together to get to a machine of 12,000, maybe 16,000 qubits.
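The qubit totals mentioned here follow from simple multiplication. The sketch below assumes every linked machine holds the same 4,000 qubits, which is a simplification made for illustration rather than a published specification.

```python
# Hypothetical assumption: every linked machine holds the same number of qubits.
QUBITS_PER_MACHINE = 4000  # the single-machine target quoted above


def linked_qubits(n_machines: int) -> int:
    """Total qubits across n identical machines joined by entangled links."""
    return n_machines * QUBITS_PER_MACHINE


print(linked_qubits(3))  # 12000: one machine plus another two
print(linked_qubits(4))  # 16000: one machine plus another three
```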

Those quantum-centric supercomputers will also bring in racks that have coprocessors on them, either conventional computers or graphics processing units (GPUs), to work with supercomputers within the same domain. You'll be able to split algorithms across conventional hardware, GPUs and quantum to solve problems you can’t solve in any other way.

Middleware and Error Correction

Bringing more QPUs into the equation adds to the complexity. We’re introducing a level of middleware, which essentially gives us access to the system using serverless technology. It gives you the ability to write your algorithms without knowing how they're going to run because the system will lay them out for you automatically.

You can literally twist a resilience knob, as it's called, to determine how much error correction you're going to run on those algorithms. The idea is that you will be able to develop using minimal resilience and then, when you want the real answer, you turn the resilience up to three to get the answers out.

That combination of modularity, middleware and error correction is the thing that will get us to the point where we can run commercial, viable capabilities on quantum computers.

Finance Use Cases

As an example of how close that is, HSBC joined the Quantum Accelerator program earlier this year and now Crédit Mutuel is the first French bank to join. They’re quoted as saying they took a big bet on AI six years ago, and it's paid off for them. They see quantum in the same kind of light: this is something that is going to improve the quality of service for their customers and their members.

Austrian bank Erste Digital is building its quantum capability to improve the accuracy and reduce the complexity of fraud management, fraud detection, risk management and high-frequency trading capabilities. They're looking at how they apply this to the futures market.

And finally, Goldman Sachs. Earlier this year we published a paper that looked at machine-learning techniques on quantum computers. When you ran an ensemble algorithm that combined quantum with conventional methods, it performed more accurately than the state of the art.

Goldman Sachs has worked out how to use this parallelization of quantum technology to reduce the resources needed to model derivatives in the hedge fund market. The recognition that parallelization is the way forward, and that it will reduce the time taken to bring quantum into a position where it can deliver real business value for them, is a major announcement.

Real Commercial Benefits by 2024

We think that in 2024, or thereabouts, we should be in a position to run circuits of 100 qubits by 100 gates depth, and those will deliver real commercial benefits. That will be on today's noisy intermediate-scale quantum (NISQ) hardware but with error mitigation and suppression built in, to be able to get precise answers out of them, which will make all the difference in the financial industry space.
