Pursuing Disruptive Innovation in AI


June 26, 2023


Sponsored Content

By Bernard Burg, director of AI and Data Science at Infineon Technologies

While artificial intelligence (AI) is a widely discussed and hotly disputed topic these days, its implementation in ChatGPT is getting a lot of attention and praise. The Generative Pre-trained Transformer (GPT) in ChatGPT is a type of large language model (LLM) that requires a considerable amount of computing power. As a leading supplier of the computing and other semiconductor technologies that AI depends on, Infineon Technologies offers a perspective that should help the many members of the design community seeking to increase their involvement in AI and take advantage of it. This article provides background and insight into AI, including timing, risks and current and future expectations.

Background of ChatGPT and LLMs

Machine learning (ML) achieved its first successes in specific vertical applications. For example, in 1997, IBM's Deep Blue supercomputer defeated Garry Kasparov, the reigning world chess champion. In 2011, IBM Watson, a question-answering computer system, won on the quiz show “Jeopardy!”. With Siri and then Alexa, natural language processing (NLP) models took off. From around 2015, deep learning models began to match or surpass humans in image classification, including tasks such as detecting skin cancer and reading magnetic resonance imaging (MRI) scans. More recently, they have been used in autonomous vehicle programs for self-driving cars.

After building all these specific vertical products, researchers turned toward consolidation: building one single model to solve all problems once and for all. To do so, they trained models on vast amounts of text from the web, including Wikipedia and books. These models are called large language models (LLMs), and the best known is ChatGPT (3.0 and 3.5) from OpenAI. Some experts believe LLMs will soon surpass human intelligence. There are currently over 30 LLMs, including PaLM from Google, LLaMA from Meta AI, GPT-4 from OpenAI and many others.

While any question can be posed to ChatGPT, a good first question could involve planning a trip, for example to visit Paris. Specifically, the question could be, “give me five tourist sites in Paris.” The request can easily be revised to 10 sites by simply saying, “make it 10.” The answer does not repeat the previous response but simply adds to it, as in a normal conversation. ChatGPT can also summarize the results in a table. Then a trip to visit the highlights can be planned, with stops in the morning and afternoon. In this example, the first response suggested a five-day trip, and any additional requirement from the user could easily be worked into the schedule. First-time users are impressed by how easy it is to interact with ChatGPT and by the quality of its responses. It is like chatting with a friend or colleague.

Timing and Growth

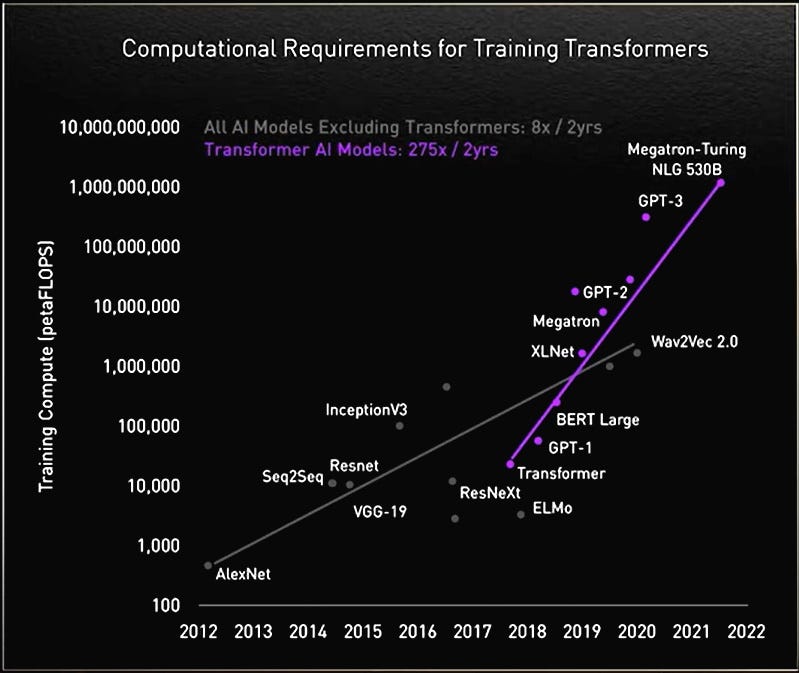

The timing for LLMs is driven by the availability of extensive computing power. As shown in Figure 1, the compute needed to train transformers, measured in floating point operations (FLOPs), has grown roughly 100,000-fold (10^5) since 2017. Model sizes grew by a factor of 1,600 in just the last few years. Getting an answer, or inference, from GPT-3 on a desktop central processing unit (CPU) could take 32 hours but requires only 1 second on an Nvidia A100-class graphics processing unit (GPU). Training GPT-3 on a single such GPU could take 30 years. Training is therefore typically performed on large clusters of 1,000 to 4,000 GPUs, taking anywhere from two weeks to as few as four days, at a cost of $500,000 to $4.6 million for a single training run. Because such massive computing resources were long reserved for a very small circle, their democratization is fairly recent.
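The 30-year figure can be roughly reproduced with a back-of-envelope calculation. This sketch assumes the widely used approximation that training costs about 6 floating point operations per parameter per training token, GPT-3's published size of about 175 billion parameters and 300 billion training tokens, an A100 peak of about 312 teraFLOP/s for dense BF16 math, and perfect scaling across GPUs. The function names are illustrative, not from any library.

```python
# Back-of-envelope GPT-3 training-time estimate (all inputs are assumptions).
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total training compute as 6 * parameters * tokens."""
    return 6.0 * n_params * n_tokens


def years_on_one_gpu(total_flops: float, peak_flops_per_s: float,
                     utilization: float = 1.0) -> float:
    """Years on one GPU sustaining `utilization` times its peak throughput."""
    return total_flops / (peak_flops_per_s * utilization) / SECONDS_PER_YEAR


def days_on_cluster(single_gpu_years: float, n_gpus: int) -> float:
    """Days on n_gpus, assuming perfect linear scaling (optimistic)."""
    return single_gpu_years * 365.25 / n_gpus


total = training_flops(175e9, 300e9)       # about 3.15e23 FLOPs
one_gpu = years_on_one_gpu(total, 312e12)  # at 100% of assumed A100 peak
print(f"One GPU: ~{one_gpu:.0f} years")
for n in (1_000, 4_000):
    print(f"{n} GPUs: ~{days_on_cluster(one_gpu, n):.1f} days")
```

At 100% utilization the estimate comes out near 32 years on one GPU and roughly 12 days on 1,000 GPUs or 3 days on 4,000. Real training runs sustain well below peak throughput, so actual times are longer.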

Figure 1. The history of the computational requirements for training transformers. Source: Nvidia

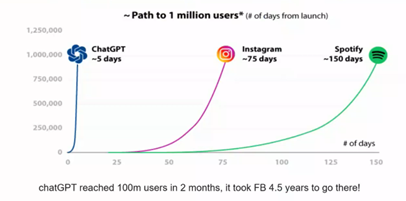

The significance of LLMs is reflected in the adoption rate of ChatGPT. As shown in Figure 2, Instagram and Spotify took 75 and 150 days, respectively, to reach 1 million users, while ChatGPT reached this milestone in only 5 days. Similarly, ChatGPT reached 100 million users in only 2 months, compared with the 4.5 years Facebook needed. This is an unprecedented adoption rate for a technology product.

Figure 2. ChatGPT’s market acceptance dwarfs other internet-based tools. Source: Google

How ChatGPT Works

ChatGPT can be thought of as a probabilistic sentence generator: a structured generator of the most common sentences. It predicts the most likely next word in a sentence, and that prediction changes with the amount of context provided. Based on what was said before, it responds with its best guess at the next word. For example, GPT-3 looks at the previous 2,000 or so tokens (words or word fragments, about 4 pages of text) to decide on the next word. In contrast, GPT-3.5 looks at about 8 pages and GPT-4 at up to about 64 pages. With these larger context windows, the “best guess” at the next word can improve dramatically. A simplified process for building an LLM is:
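The next-word idea, and why a longer look-back helps, can be illustrated with a deliberately tiny model. This sketch swaps in a simple count-based n-gram predictor for the transformer that GPT actually uses, and it counts whole words rather than tokens; `build_model` and `next_word` are hypothetical names. The `window` parameter plays the role of the context length.

```python
from collections import Counter, defaultdict


def build_model(words: list[str], max_order: int) -> dict:
    """Count which word follows each run of 1..max_order preceding words."""
    model: dict = defaultdict(Counter)
    for i in range(1, len(words)):
        for order in range(1, max_order + 1):
            if i - order < 0:
                break
            model[tuple(words[i - order:i])][words[i]] += 1
    return model


def next_word(model: dict, history: list[str], window: int, max_order: int):
    """Greedy guess at the next word, looking back at most `window` words."""
    visible = history[-window:] if window > 0 else []  # the context window
    for order in range(min(max_order, len(visible)), 0, -1):
        counts = model.get(tuple(visible[-order:]))    # longest match first
        if counts:
            return counts.most_common(1)[0][0]         # most frequent follower
    return None                                        # context never seen


corpus = ("the eiffel tower is in paris . the louvre is in paris . "
          "the colosseum is in rome .").split()
model = build_model(corpus, max_order=3)
prompt = "the colosseum is in".split()
print(next_word(model, prompt, window=3, max_order=3))
print(next_word(model, prompt, window=2, max_order=3))
```

With a three-word window the model sees “colosseum is in” and answers “rome”; with a two-word window it sees only “is in” and falls back to the more common “paris”.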

Step 1: Train a foundation model that holds detailed information on many different topics.

Step 2: A pre-prompt, provided by the LLM owner or others, defines the ‘personality’ of the chatbot (friendly, helpful…) and the toxic domains it should avoid.

Step 3: Fine-tuning on additional data adds value from a specific perspective or domain.

Step 4: Reinforcement learning from human feedback (RLHF) steers the model away from responses that human reviewers rated poorly, filtering out some responses.
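The pre-prompt from Step 2 and the running conversation are sent together with each new request, which is how a follow-up like “make it 10” in the Paris example can be understood. The following is a hypothetical sketch, assuming words as a stand-in for tokens and a simple keep-the-newest-turns policy; `Message` and `build_prompt` are illustrative names, not any vendor's API.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # "system", "user" or "assistant"
    text: str


def build_prompt(pre_prompt: str, history: list[Message],
                 budget_words: int) -> list[Message]:
    """Always keep the pre-prompt, then add the most recent turns that fit."""
    used = len(pre_prompt.split())  # words stand in for tokens (assumption)
    kept: list[Message] = []
    for msg in reversed(history):   # walk from the newest turn backwards
        cost = len(msg.text.split())
        if used + cost > budget_words:
            break                   # older turns fall out of the window
        kept.append(msg)
        used += cost
    return [Message("system", pre_prompt)] + kept[::-1]  # chronological order


pre_prompt = "You are a friendly, helpful travel assistant."
history = [
    Message("user", "Give me five tourist sites in Paris."),
    Message("assistant", "Eiffel Tower, Louvre, Notre-Dame, Sacre-Coeur, Arc de Triomphe."),
    Message("user", "Make it 10."),
]
for m in build_prompt(pre_prompt, history, budget_words=20):
    print(f"{m.role}: {m.text}")
```

With a 20-word budget the oldest question is dropped while the pre-prompt and the latest exchange survive, which is one reason very long conversations can “forget” early details.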

To provide a response, a tool like ChatGPT captures the user's input and then, in many cases, calls an application programming interface (API). The API retrieves information from another server, often for a licensing fee, to provide the answer. This can take several iterations.
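The API round trip can be pictured as a loop: the model either answers or asks for an external call, and each result is fed back until it can answer or a step limit is reached. In this minimal sketch, `fake_model` and `weather_api` are hypothetical stand-ins for the LLM and for a licensed third-party service, and the request format is an assumption rather than ChatGPT's actual protocol.

```python
from typing import Callable


def fake_model(question: str, tool_results: list[str]) -> dict:
    """Hypothetical LLM stand-in: requests data first, then answers."""
    if not tool_results:
        return {"tool": "weather", "arg": "Paris"}
    return {"answer": f"Answer to {question!r} using: {tool_results[-1]}"}


def weather_api(city: str) -> str:
    """Hypothetical stand-in for an external API on another server."""
    return f"Forecast for {city}: 21 C, sunny"


def respond(question: str, tools: dict[str, Callable[[str], str]],
            max_steps: int = 3) -> str:
    """Alternate between the model and external calls until it answers."""
    results: list[str] = []
    for _ in range(max_steps):
        step = fake_model(question, results)
        if "answer" in step:
            return step["answer"]
        results.append(tools[step["tool"]](step["arg"]))  # external API call
    return "No answer within the step limit."


print(respond("What's the weather in Paris?", {"weather": weather_api}))
```

The step limit keeps a chain of external calls from running indefinitely.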

Users should not trust an LLM tool unquestioningly; there are risks associated with its use. While it can provide very nicely packaged responses to many inquiries (The Good), it can be wrong (The Bad) and, in some instances, very wrong (The Ugly), in part because the user does not know what filters have been applied that could skew or even misdirect the response.

Getting Systems Expertise for AI

As a leading semiconductor systems solutions provider, Infineon Technologies addresses many critical areas, including safer and more efficient automobiles; more efficient, greener energy conversion; connected and secure IoT systems; and power management, sensing and data transfer capabilities for these and many other industries. For example, Infineon power systems are part of the DC-DC solutions that deliver thousands of amperes to GPUs. In addition, a variety of system-on-chip (SoC) solutions exist for memory, advanced automotive and other applications.
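The scale of these currents follows from the power relation I = P/V: a GPU draws hundreds of watts at a core supply voltage below 1 V. As a rough worked example, assuming (for illustration only, not from any Infineon or Nvidia datasheet) about 700 W delivered at roughly 0.7 V:

```latex
I = \frac{P}{V} \approx \frac{700\,\mathrm{W}}{0.7\,\mathrm{V}} = 1000\,\mathrm{A}
```

Halving the supply voltage at the same power doubles the current.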

With the recent acquisition of Imagimob AB, a leading provider of machine learning platforms for edge devices, Infineon Technologies is well positioned to address the fast-growing market for Tiny Machine Learning (TinyML) and Automated Machine Learning (AutoML), providing an end-to-end development platform for machine learning on edge devices. Customers can draw on this experience across these various applications, including LLMs.

AI Outlook

Controversial as it may be, artificial intelligence is already here, and its use continues to grow. As the design community seeks to increase its involvement and take advantage of AI, it should look to an established systems solutions provider such as Infineon. By leveraging Infineon's market leadership in many areas, including AI/ML, customers can bring their products to market quickly, building on an extensive portfolio of advanced sensors and IoT solutions.
