A Building Block Approach to AI Hardware and Software

Evolving data processing needs are beginning to drive demand for templatized AI hardware and software deployments.

June 3, 2019

It’s true. Artificial intelligence, 5G and edge computing are all buzzwords. But each of those themes, taken individually or in tandem, could reshape how enterprise and industrial companies process data.

“AI is moving a lot of the processing power closer to the edge, and taking a lot of the data from IoT devices,” said Steven Carlini, vice president of innovation and data center at Schneider Electric, in an interview at IoT World. “But a lot of the processing is moving toward the edge because the sheer amount of data that needs to be compiled is impossible to send back and forth between the cloud.”

The difficulty in benchmarking cloud-based processing and storage is another factor convincing some organizations to invest in edge computing or on-prem data centers. “We have some customers that have reached bills of $500,000 a month,” said Matt Burr, vice president and general manager of Pure Storage’s FlashBlade unit. “They’re looking to bring [some of their workloads] back down on-prem,” he added, stressing that the pendulum is by no means completely swinging back in the on-premises direction.

When asked to predict what the interplay might look like between edge and cloud in the near future, Burr said: “It’s too soon to actually know.” For now, the majority of Pure Storage’s customers use more of a centralized computing model, whether in the cloud or in a data center. On the flip side, the company’s executives are noticing an uptick in demand from customers wanting to deploy powerful computing technology in a wide range of locations.

Brian Schwarz, vice president of product management at Pure Storage, said: “We’ve had customers call us and ask: ‘Can I put your [solid-state array] device on my oil rig in the middle of the ocean? It’s really important that I have sensor data so I can know that drill bit is operating well at the bottom of the ocean. And by the way, it’s so much data, and it’s far away from land where I can’t get a really fat pipe from a network connection.’” The company has received similar requests from customers wanting to install its hardware into autonomous vehicle prototypes, truck beds and airplanes. The growing variability in use cases is sparking demand for common strategies to deploy computing regardless of location, but computing at the edge can be difficult.

Don’t Push Me Cause I’m Close to the Edge…

One of the chief selling points of cloud computing in the first place was its potential to help organizations save money, but the cloud’s potential to support scale can lead to unexpectedly large cloud bills, as CloudTech recently observed. While cloud computing is here to stay, edge computing promises to help organizations avoid overreliance on the cloud.

But especially data-hungry organizations with edge and AI ambitions must grapple with how to cool and power the super high-power computers deployed locally. “Usually, in a data center when you’re doing AI, it’s with GPUs,” Carlini said. “Moving that to the edge is a big challenge, and it’s something we’re very involved with. How are you going to deploy that much computational power?”

An organization might plan to deploy a dozen new miniature data centers at the edge. “They are going to need 15 kilowatts of power and some kind of cooling for that,” Carlini said. For the sake of context, AFCOM, the data center organization founded in 1980 as the Association for Computer Operations Management, breaks data center density into categories ranging from low (4 kW per rack and lower) to extreme (an average of 16 kW per rack or higher).

Organizations on the higher end of that spectrum are gravitating toward liquid cooling rather than air cooling, regardless of whether that environment is in a traditional data center or an unconventional edge environment. “We’re developing prototypes for liquid cooling for edge applications with AI, just specifically for AI applications with GPUs,” Carlini said.

In addition to AI hardware building blocks in the form of processors, memory and other technologies, virtualization is another important aspect that has special relevance for the edge, said Scott Nelson, chief product officer and vice president of product at Digi International. “If I’m going to enable edge compute, I have to partition. I have to make sure that the compute doesn’t interfere with my purpose,” he said. In hard real-time systems, hypervisors manage the sophisticated partitioning between memory and compute functions. “For instance, if you’re running an analytics piece of software on [an unmanned aerial vehicle] that has the same processor running the autopilot, you have got to be careful you don’t mess up the autopilot,” Nelson said. “In most cases, if the UAV has any mass, the FAA is not going to allow you to do that unless you physically partition it.”

A Building Block Approach Emerging for Centralized Computing

In general, surging data processing demands are driving demand for standardized AI hardware and software — especially in traditional data center and cloud environments. Oftentimes, this standardization is a result of necessity. “The number one reason people bring us in is when they actually run out of power,” Carlini said. “Those organizations can’t draw more power from the utility but they want more information technology.”

Large internet companies with growing data center operations are also helping establish best practices for other companies with burgeoning IT demands. “The internet giants publish just crazy efficiency numbers. Our customers call us and ask: ‘Well, how did they get there?’” Carlini said.

Schneider Electric has reference designs and digital tools to help companies model out data centers. Such models provide a sense of what the efficiency and availability of a data center will be along with the cost. The company also offers prefabricated data centers. “Here, we guarantee efficiency, availability and cost because we’re building them in the factory,” Carlini said.

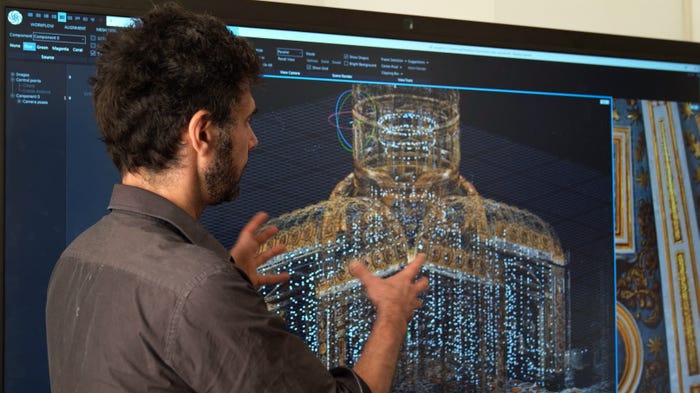

Similarly, Pure Storage offers what it calls “AI-ready infrastructure” or AIRI. “It’s a machine learning/deep learning environment that we partnered up with Nvidia on,” Schwarz said. The intent behind it is to enable organizations to build an AI supercomputer based on the experience of dozens of companies who have deployed such technology before. “You don’t have to learn this from the base particles,” he said. “You can have your AI people work on the hard part, which might be training a car to recognize the millions and millions of instances that it might encounter on the road.”

Although the IoT as a technological trend is no longer novel, parallel interest in AI and, more recently, 5G from a variety of enterprise and industrial companies is changing how many such organizations approach computing. “The question is: Will disparate vendors aggregate their capabilities, and leverage them in a partnership?” Nelson said. “What will they do with these building blocks as they develop them?”

How Much of an AI Enabler Will 5G Be?

5G may be at an early stage of adoption at present, but the technology could potentially have significant ramifications for enterprise organizations with AI or edge computing ambitions, and could serve as a platform for artificial intelligence applications.

“There are discussions of 5G nodes on 50 meter-square grids in order to get coverage,” Nelson said. Although the 5G infrastructure will take years to build out, when it is rolled out, the network will be ultra dense — at least in most geographical areas with substantial populations.

“Because of the bandwidth, and the capabilities of 5G as a network, every one of those nodes is going to have, relatively speaking, an incredible amount of compute,” Nelson said.

Especially for autonomous vehicles, the two technologies could converge to help support real-time decision making. The overlap between AI and 5G “is an everyday subject for [the self-driving car] segment, but it [is] still a relatively small part of IoT in general,” Nelson said. “At this point, I would say there is more talk than traction [regarding the AI-5G convergence] outside of self-driving vehicles.”

Telcos managing 5G networks will be able to virtualize that computing capability over time. “They’ll be able to effectively deploy what would be a distributed server architecture — a distributed data room,” Nelson said.

When carriers have such a dense network of relatively high-speed processing, they will have the potential to distribute and virtualize that computing horsepower. “It will enable, effectively, another cloud,” Nelson explained. “It will truly be a fog,” he added, referring to the term whose meaning is nearly synonymous with edge computing. “What is fog? It’s a cloud at ground level. What’s 5G going to be? It’s going to be the same thing.”

It is uncertain how long the full build-out of such a distributed computing network will take, but 5G will put edge data centers near towers at the base stations or in traditional telco central offices. “The carriers are going to need these local data centers within each one of these clusters,” Carlini said. “The question is who’s going to deploy those data centers because the carriers are spending a lot of money now on spectrum.”

Once the 5G spectrum is up and running, carriers will shift to filling it with data. “Once they’ve deployed this capital, then they’ll switch to monthly recurring revenue,” Nelson said. “They’ll say: I’m moving data through my SIM cards, what else can I do?” To date, carriers have struggled to wrest the cloud computing market from the likes of Amazon, Microsoft and Google. But 5G could potentially enable telcos to rent out edge computing capacity, providing a building block for local IoT deployments. “It may not be coincident with the 5G launch, but someone will say: ‘Hey, if we virtualize these nodes together, then it looks like we have an entire data room on the west end of Manhattan. Why don’t we just call that the Manhattan cloud and see what we can do with it?’” Nelson said.

While skeptics decry 5G as overhyped, it is too soon to decide what its role in IoT deployments may be. “Look at all the sensor data in this new IoT world. There’s some belief that it’s going to get connected through [a] 5G network,” Schwarz said.

Whatever the case may be, the edge model seems poised for acceleration in years to come — whether that involves processing in a base station or closer to the point of data generation. “You’d prefer to do everything centrally if you could,” Schwarz said. “It’s actually a cleaner model for any kind of industry or company to deploy. But that approach often doesn’t meet the business requirements at some level. And that’s where you get into this: ‘You got to push stuff out to the edge.’”
